We’ve been using AD authentication with our RHEL and CentOS 4 and 5 systems for some time now, so I was curious to see what changes RHEL 6 might bring. Not much, happily, but there was one change that took a little while to figure out. We’ll run through all the steps, from beginning to end, here.

Monday, November 29, 2010

Saturday, November 27, 2010

Using Error (and other multiple) Paths in SSIS

SSIS provides for multiple paths between tasks: each precedence constraint can fire on Success, Failure, or Completion. Very helpful stuff; here we’ll look at a simple solution to a common scenario.

Below is a job that stops a blocking service, reads from the source DB (SQL CE) file, truncates the destination table, and then copies the data from the source to destination. Finally, it starts the service again.

What if, however, our test job fails after stopping the service? We can provide for failure notification through SQL Agent jobs, but wouldn’t it be nice, also, to have the service start again, even after a failure?

We’ll set that up using failure paths, such that the tasks will go directly to the start service task in the event of a failure.
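SSIS precedence constraints are drawn in the designer rather than written as code, so the sketch below models the same control flow in plain Python, purely as an analogy. The callables (`stop_service`, `steps`, `start_service`) are hypothetical stand-ins for the SSIS tasks. One designer detail matters when several Failure paths converge on the Start Service task: set those constraints to Logical OR (the constraint's `LogicalAnd` property set to False). Otherwise the task waits for all incoming constraints to be satisfied, which never happens when only one of them can fire.

```python
from typing import Callable, List


def run_package(stop_service: Callable[[], None],
                steps: List[Callable[[], None]],
                start_service: Callable[[], None]) -> bool:
    """Run the steps; restart the service whether or not they succeed.

    Returns True if every step succeeded, False if one failed.
    """
    stop_service()
    try:
        for step in steps:
            step()  # e.g. read source, truncate destination, copy data
        return True
    except Exception:
        # Failure path: skip the remaining steps...
        return False
    finally:
        # ...but always fall through to the "start service" task.
        start_service()
```

Returning False rather than hiding the error keeps the run marked as failed, so SQL Agent failure notification still has something to report.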


Creating an SSIS SQL Compact Data Source

There’s no out-of-the-box SQL Compact data source in SSIS, which is a problem when you need to copy data from a SQL CE data file.

It turns out, though, that it’s easy to repurpose an OLE DB connection to read from a SQL Compact database.
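For reference, the connection string an OLE DB connection manager needs for a SQL Compact file looks roughly like the one built below. This assumes SQL Server Compact 3.5, whose OLE DB provider is registered as `Microsoft.SQLSERVER.CE.OLEDB.3.5` (4.0 uses `Microsoft.SQLSERVER.CE.OLEDB.4.0`); the `.sdf` path is hypothetical.

```python
def sqlce_oledb_connection_string(sdf_path: str, version: str = "3.5") -> str:
    """Return an OLE DB connection string for a SQL Server Compact .sdf file.

    Assumes the Compact OLE DB provider for `version` is installed on the
    machine that runs the package.
    """
    provider = f"Microsoft.SQLSERVER.CE.OLEDB.{version}"
    return f"Provider={provider};Data Source={sdf_path};"


if __name__ == "__main__":
    # Hypothetical path to the source database file.
    print(sqlce_oledb_connection_string(r"C:\data\source.sdf"))
```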


Wednesday, September 22, 2010

Connection Failed error message with PeopleTools Change Assistant on 64 Bit Windows

No logs, no details. Just failed.

But then, finally, a hint: Data Mover (which shouldn't connect, since the database is still at 8.49) wouldn't even run; it failed with the error "missing or invalid version of sql library psora". Aha! Now that is something one can work with.

It turns out it needs the 32-bit Oracle client. Install that, and everything is good, again.
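If you're not sure which Oracle client a machine has, the bitness of a DLL can be read from its PE header: the COFF `Machine` field is 0x014C for x86 and 0x8664 for x64. A minimal sketch, assuming you point it at the client's `oci.dll` (its exact location depends on the installation):

```python
import struct

# COFF "Machine" values (IMAGE_FILE_MACHINE_*) for the common cases.
MACHINE_TYPES = {
    0x014C: "32-bit (x86)",
    0x8664: "64-bit (x64)",
    0x0200: "64-bit (Itanium)",
}


def pe_bitness(path: str) -> str:
    """Describe the target architecture of a Windows DLL or EXE."""
    with open(path, "rb") as f:
        dos_header = f.read(64)
        if len(dos_header) < 64 or dos_header[:2] != b"MZ":
            raise ValueError(f"{path}: not a PE file (missing MZ header)")
        # e_lfanew, at offset 0x3C, is the file offset of the "PE\0\0" signature.
        (pe_offset,) = struct.unpack_from("<I", dos_header, 0x3C)
        f.seek(pe_offset)
        header = f.read(6)
    if len(header) < 6 or header[:4] != b"PE\x00\x00":
        raise ValueError(f"{path}: PE signature not found")
    # The COFF header's Machine field immediately follows the signature.
    (machine,) = struct.unpack_from("<H", header, 4)
    return MACHINE_TYPES.get(machine, f"unknown machine type 0x{machine:04X}")
```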


Friday, July 16, 2010

How to Delegate Services control in Windows

Microsoft offers helpful documentation detailing how to use subinacl to give a user control over a service. Unfortunately, it hasn’t been updated in quite some time, and it’s now out of date: beginning with Windows Server 2003 SP1, authenticated users can no longer enumerate services.

While that’s a good thing, it renders the solution presented by Microsoft only partially complete.

So we’ll correct that, going through all the steps necessary to give an otherwise unprivileged user permission to control a given service through the Services control panel. This works on Windows Server versions up through 2008 R2.
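The piece the older articles miss is the Service Control Manager's own security descriptor, which can be read with `sc sdshow scmanager` and written back with `sc sdset scmanager "<sddl>"`. The sketch below appends an access-allowed ACE for one account to the DACL of that SDDL string. The default rights `CCLCRPRC` (connect, enumerate services, query lock status, read control) mirror what the default descriptor typically grants interactive users; the SID is hypothetical, so substitute the delegated user's or group's SID, and keep a copy of the original `sdshow` output before changing anything.

```python
def _top_level_find(sddl: str, token: str, start: int = 0) -> int:
    """Return the index of `token` outside any parenthesised ACE, or -1."""
    depth = 0
    for i, ch in enumerate(sddl):
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        elif depth == 0 and i >= start and sddl.startswith(token, i):
            return i
    return -1


def add_allow_ace(sddl: str, sid: str, rights: str = "CCLCRPRC") -> str:
    """Return `sddl` with (A;;rights;;;sid) appended to the end of its DACL."""
    ace = f"(A;;{rights};;;{sid})"
    if ace in sddl:
        return sddl  # already present; leave the descriptor unchanged
    dacl = _top_level_find(sddl, "D:")
    if dacl == -1:
        raise ValueError("descriptor has no DACL (D:) section")
    # The DACL ends where the SACL ("S:") starts, or at the end of the string.
    sacl = _top_level_find(sddl, "S:", dacl)
    end = len(sddl) if sacl == -1 else sacl
    return sddl[:end] + ace + sddl[end:]
```

Appending at the end of the DACL is fine here because the typical default descriptor contains only allow ACEs; if yours has deny ACEs, they should stay ahead of the new allow entry, which this placement preserves. The per-service permissions themselves are still granted with subinacl, as in Microsoft's documentation.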


Sunday, June 27, 2010

Linux error id: cannot find name for user ID xxxxx when using Domain Authentication

We recently had a problem, after redoing some Samba configuration on RHEL 5, in which a user would log in successfully but then be presented with the following errors:

    id: cannot find name for user ID 10001
    id: cannot find name for group ID 10000
    id: cannot find name for user ID 10001

Of course, none of our domain ACLs worked for this user, either, which was a real problem.

Finally, after ruling out the more obvious causes (communication with the domain controllers, verified with wbinfo; uid and gid allocation and linking, set explicitly with wbinfo; the winbind cache, cleared in both /var/cache/samba and /var/lib/samba; date/time discrepancies; and domain membership), we found the culprit: file permissions.
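`id` resolves numbers to names through NSS (and therefore through winbind here), so the same lookup can be reproduced from Python to check a fix without logging in again. A small sketch using the standard `pwd` and `grp` modules; the IDs are the ones from the errors above. Since the culprit turned out to be file permissions, run it as the affected user rather than as root, which may not hit the same permission checks.

```python
import grp
import pwd
from typing import Optional


def user_name(uid: int) -> Optional[str]:
    """Name for `uid`, or None where `id` would say "cannot find name"."""
    try:
        return pwd.getpwuid(uid).pw_name
    except KeyError:
        return None


def group_name(gid: int) -> Optional[str]:
    """Name for `gid`, or None where `id` would say "cannot find name"."""
    try:
        return grp.getgrgid(gid).gr_name
    except KeyError:
        return None


if __name__ == "__main__":
    for label, name in (("user 10001", user_name(10001)),
                        ("group 10000", group_name(10000))):
        print(label, name if name is not None else "UNRESOLVED")
```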


Monday, June 21, 2010

NT_STATUS_PIPE_DISCONNECTED with Samba Winbind and Windows Server 2008 R2 Domain Controller

We recently upgraded our domain controllers to Windows Server 2008 R2, and our RHEL 5 authentication through our Windows domain immediately broke.


Here was the error:

    [2010/06/21 09:32:57, 0] rpc_client/cli_pipe.c:rpc_api_pipe(790)
    rpc_api_pipe: Remote machine adserver.my.edu pipe \NETLOGON fnum 0x8007 returned critical error. Error was NT_STATUS_PIPE_DISCONNECTED

A little searching online turns up a lot of people with this or related problems, and many suggested fixes, but mostly no confirmed solution. But there is this: https://bugzilla.redhat.com/show_bug.cgi?id=561325

In short: there's a bug in the samba package that prevents it from working with Windows Server 2008 R2 domains. If you’re running into this problem, the solution is to remove your existing samba installation and install the samba3x packages instead.

Note that samba3x was a "technology preview" from Red Hat, which meant it came with limited support. That has since changed: it's now a supported package in RHEL 5.

    yum erase samba samba-common
    yum install samba3x samba3x-client

You’ll have to redo your configuration, so it's worth backing up your /etc/samba/smb.conf file first.
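A minimal sketch of that backup step in Python; the timestamped naming scheme is an arbitrary choice, and the optional `backup_dir` lets you keep the copy outside /etc/samba if you prefer:

```python
import shutil
import time
from pathlib import Path
from typing import Optional


def backup_config(path: str = "/etc/samba/smb.conf",
                  backup_dir: Optional[str] = None) -> Path:
    """Copy `path` to <name>.<timestamp>.bak and return the backup's path."""
    src = Path(path)
    target_dir = Path(backup_dir) if backup_dir else src.parent
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dest = target_dir / f"{src.name}.{stamp}.bak"
    shutil.copy2(src, dest)  # copy2 also preserves mode bits and timestamps
    return dest
```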


Sunday, April 25, 2010

Simple Method to Validate Data Read at the beginning of an SSIS Package

We’ve looked at using transactions in an SSIS package to ensure that, for instance, a failure in your package's read step doesn't leave you without the data you’ve already deleted to make room for it.

This is an effective, useful, and very flexible method.

If your project spans multiple servers, though, it requires changes to the Distributed Transaction Coordinator (MSDTC) settings that you might not be able to make.

There’s a simpler way, though it doesn’t offer all of the protections of wrapping your package in a transaction. We’ll take a look at that, here.
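The post's own package isn't reproduced here, but one common way to get this effect is to read (and sanity-check) the source completely before deleting anything at the destination. A sketch under stated assumptions: `sqlite3` stands in for both databases, and the `items` table and the "at least one row" rule are hypothetical. Unlike a transaction, this approach isn't designed to protect you if the reload itself fails partway through.

```python
import sqlite3
from typing import List, Tuple


def refresh_table(source: sqlite3.Connection, dest: sqlite3.Connection,
                  min_rows: int = 1) -> int:
    """Replace dest.items with source.items, but only after a validated read."""
    # 1. Read everything first: a source failure raises here, before any delete.
    rows: List[Tuple[int, str]] = source.execute(
        "SELECT id, name FROM items").fetchall()
    # 2. Validate what was read (a hypothetical minimum-row-count rule).
    if len(rows) < min_rows:
        raise ValueError(f"source returned {len(rows)} rows; destination left untouched")
    # 3. Only now clear and reload the destination.
    dest.execute("DELETE FROM items")
    dest.executemany("INSERT INTO items (id, name) VALUES (?, ?)", rows)
    dest.commit()
    return len(rows)
```

Holding the rows in memory keeps the sketch short; for large tables, loading into a staging table and validating that would be the more practical variant.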

Friday, April 23, 2010

Utilizing Transactions in SSIS to Rollback After a Failed Import

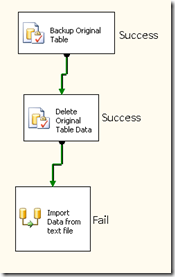

SSIS is used primarily to import data into a database, particularly from flat files but also from other databases. The typical SSIS package for this task looks something like the picture at the left: back up the current data, delete the current data from the destination table, and then import the data from the source.

This is all good, and it works well. When it works. The trouble is that if reading from the source fails (say, the credentials for the source database changed), you’ve already blown away the current (production) data. The job fails, and the data remains missing.
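The transactional fix ties the delete and the import together, so a failure in either rolls back both. In SSIS that means setting `TransactionOption` to `Required` on the package or a container, which enlists MSDTC. The sketch below shows the same all-or-nothing behavior with `sqlite3` as a stand-in destination; the `items` table and the `read_source` callable are hypothetical.

```python
import sqlite3
from typing import Callable, Iterable, Tuple

Row = Tuple[int, str]


def replace_in_transaction(dest: sqlite3.Connection,
                           read_source: Callable[[], Iterable[Row]]) -> None:
    """Delete current rows and import new ones; roll back if anything fails."""
    with dest:  # commits on success, rolls back if the block raises
        dest.execute("DELETE FROM items")
        # If reading the source fails here (e.g. changed credentials),
        # the DELETE above is rolled back and the production rows survive.
        dest.executemany("INSERT INTO items (id, name) VALUES (?, ?)",
                         read_source())
```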


Thursday, April 1, 2010

Disabling ASR on a Windows Server

ASR stands for Automatic Server Recovery. It's a feature whereby the iLO of an HP server will reboot it if it detects that something has gone wrong with the operating system. Good idea, except when the detected failure isn't really a failure, or when you want to troubleshoot the problem while it's occurring.

The most straightforward method for changing this setting is to boot the server into the BIOS setup (F9) screen, and browse to Server Availability –> ASR Status.

Sometimes, though, you’d like to make this change without downtime. That appears to be possible, although a reboot still seems to be necessary at some point.


Thursday, January 14, 2010

Adding an Oracle home to an agent inventory

When an Oracle inventory is saved in a non-standard location, the Oracle Grid Control agent can be unable to enumerate the software that is in that Oracle Home. This is true even when it can find the home itself.

In OEM, you’ll run into an error like that below when you click on the home in the Targets list:


    Error Could not find Oracle Home <ORACLE_HOME>
    in the inventory collected for <hostname>

We’ll use TESTSRV2 as a troubleshooting example.
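Before digging into the agent, it helps to confirm whether the home is registered in the inventory at all. A minimal sketch, assuming the usual layout: `oraInst.loc` has an `inventory_loc=` line, and the inventory lists homes in `<inventory_loc>/ContentsXML/inventory.xml` as `HOME` elements with `NAME` and `LOC` attributes (detached homes are flagged `REMOVED="T"`). Paths are compared as-is, so on Windows you may want a case-insensitive comparison.

```python
import os
import xml.etree.ElementTree as ET
from typing import Dict


def read_orainst_loc(path: str) -> Dict[str, str]:
    """Parse key=value lines such as inventory_loc and inst_group."""
    settings: Dict[str, str] = {}
    with open(path) as f:
        for line in f:
            line = line.strip()
            if line and not line.startswith("#") and "=" in line:
                key, value = line.split("=", 1)
                settings[key.strip()] = value.strip()
    return settings


def registered_homes(inventory_loc: str) -> Dict[str, str]:
    """Map home NAME -> LOC for homes listed in ContentsXML/inventory.xml."""
    xml_path = os.path.join(inventory_loc, "ContentsXML", "inventory.xml")
    root = ET.parse(xml_path).getroot()
    return {
        home.get("NAME", ""): home.get("LOC", "")
        for home in root.iter("HOME")
        if home.get("REMOVED") != "T"  # detached homes stay listed but flagged
    }


def home_is_registered(orainst_loc: str, oracle_home: str) -> bool:
    """True if `oracle_home` appears in the inventory oraInst.loc points to."""
    inventory_loc = read_orainst_loc(orainst_loc)["inventory_loc"]
    wanted = os.path.normpath(oracle_home)
    return any(loc and os.path.normpath(loc) == wanted
               for loc in registered_homes(inventory_loc).values())
```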

Locate the oraInst.loc file

The oraInst.loc file points to the inventory for all of the Oracle software on the host (through its inventory_loc entry). Normally, Oracle maintains a single copy of this file, but when one is saved in a non-standard directory, it can get left out.

Monday, January 4, 2010

Oracle Database loses its OEM configuration after a Cold Backup

This was an annoying problem that took a while to track down. In short: after our scheduled cold backups, an Oracle (11g) database would lose its configuration in Oracle Enterprise Manager. It would present a "Metric Collection Error" that would go away after reconfiguring the database.

The fix, as it turned out, was simple. The trace directory (bdump in 10g) was too full, and as a result the metric collection (the process by which OEM gathers data about the database) was timing out. We ruled out performance problems on the database side; the system is barely utilized. Instead, we discovered that because there were so many files in the trace directory (more than 31,000), it was taking a long time for the OEM agent to get to the alert log, which is one of the metrics it collects. This was hinted at in the emagent.trc file:

    2010-01-04 13:01:53,087 Thread-47647632 ERROR TargetManager: TIMEOUT reached in computing dynamic properties for target TESTDB,
    oracle_database::compute timings were [decideIncludeDB:0-0] [SystemTablespaceNumber:0-0] [SysauxTablespaceNumber:0-0 ...
    [DeduceAlertLogFile:1-1] [GetCPUCount:1-1] [EnabledFeatures:1-1] [GetOSMInstance:1-1] [GetNLSParam:1-1] [GetAdrBase:1-(1)]

As the log shows, one of the things the agent was trying to do was locate the alert log (DeduceAlertLogFile). Enumerating all of the small files in the trace directory took a long time, so we shut down the instance, cleared out the trace directory, and restarted the instance. That took care of the problem.

While troubleshooting, we also increased the dynamic properties timeout (dynamicPropsComputeTimeout_oracle_database) in the emd.properties file (in [agent_home]/sysman/config), changing it from the default (120) to a larger value (240). That did not help in our case, though it's a good troubleshooting step should you run into a similar problem.
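Counting and clearing old trace files can be scripted. A sketch with hypothetical choices (the 30-day cutoff and the `.trc`/`.trm` suffixes); following the post, do any actual deletion with the instance shut down. It defaults to a dry run that only lists candidates, and it never touches the alert log. In 11g the trace directory lives under the ADR, e.g. `<ADR base>/diag/rdbms/<db_name>/<SID>/trace`.

```python
import os
import time
from typing import List


def count_files(trace_dir: str) -> int:
    """Number of regular files directly inside `trace_dir`."""
    with os.scandir(trace_dir) as entries:
        return sum(1 for e in entries if e.is_file(follow_symlinks=False))


def prune_trace_dir(trace_dir: str, older_than_days: float = 30,
                    dry_run: bool = True) -> List[str]:
    """List (and, unless dry_run, delete) .trc/.trm files older than the cutoff."""
    cutoff = time.time() - older_than_days * 86400
    victims = []
    with os.scandir(trace_dir) as entries:
        for entry in entries:
            if not entry.is_file(follow_symlinks=False):
                continue
            if entry.name.startswith("alert_"):
                continue  # keep the alert log the agent reads
            if entry.name.endswith((".trc", ".trm")) and entry.stat().st_mtime < cutoff:
                victims.append(entry.path)
    if not dry_run:
        for path in victims:
            os.remove(path)
    return sorted(victims)
```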

